Scale

Fri W3

Announcements

- Welcome to IDS-101-13: Thinking Machines!

- It's Friday of week 3

- Today we're doing something interesting I hope.

- "Can I teach them something cool?" -Shouvik

- An AI primer, pursuant to Week 2 deliverable: "Preliminary Analysis".

- Should be generically good for art people as well, if indirectly.

ML

- Whirlwind tour, some review, some novel

- Scale: Macronutrients

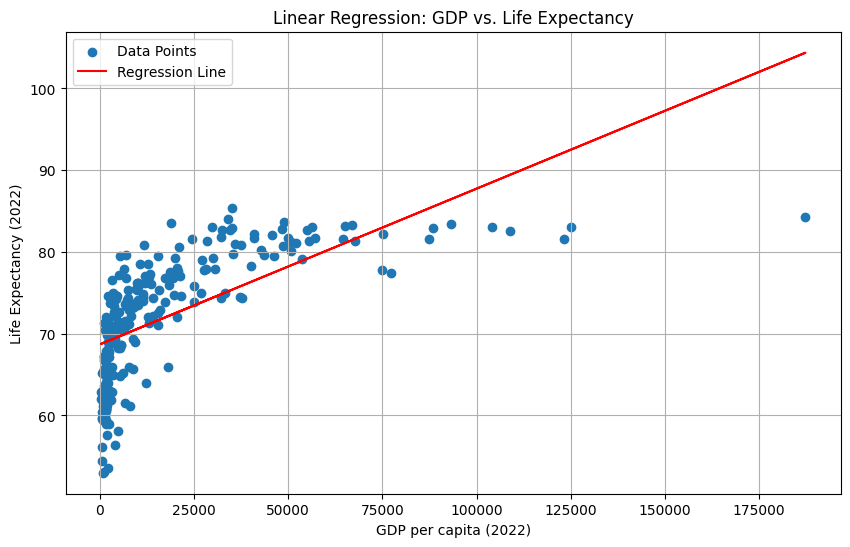

- Linear Regression: Wealth vs Life Expectancy

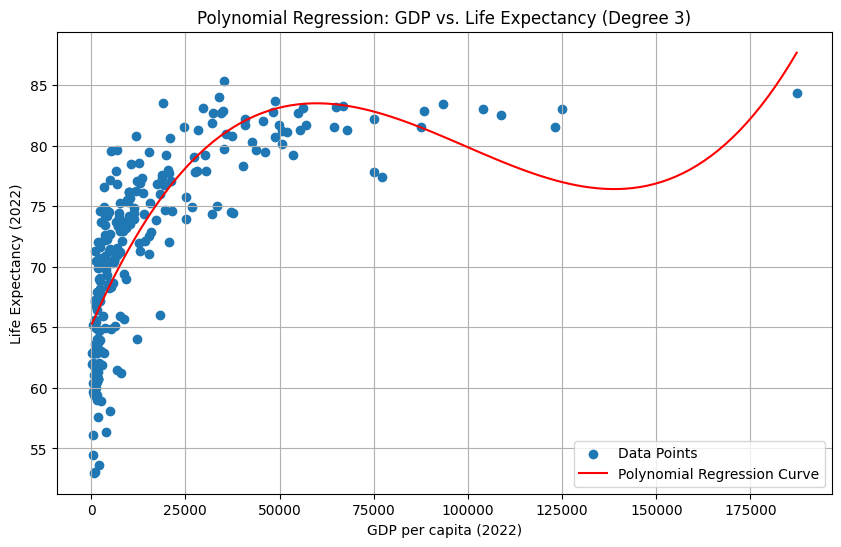

- Polynomial Regression: Wealth vs Life Expectancy

Scale

- Suppose we eat food.

- FDA keeps track of food because it's the government or something.

- Many people eat food for calories, a unit of energy.

- Most calories come from protein, carbohydrates or fats.

- Proteins and carbohydrates provide 4 calories per gram

- Fats provide 9 calories per gram
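A minimal sketch of the arithmetic those per-gram figures imply. The function name and the example snack are made up for illustration; only the 4/4/9 factors come from the slide.

```python
# Calories per gram for each macronutrient, as stated on the slide.
CALORIES_PER_GRAM = {"protein": 4, "carbohydrate": 4, "fat": 9}


def estimate_calories(protein_g, carbohydrate_g, fat_g):
    """Estimate total calories from grams of each macronutrient."""
    return (
        protein_g * CALORIES_PER_GRAM["protein"]
        + carbohydrate_g * CALORIES_PER_GRAM["carbohydrate"]
        + fat_g * CALORIES_PER_GRAM["fat"]
    )


# Hypothetical snack: 10 g protein, 20 g carbohydrate, 5 g fat.
print(estimate_calories(10, 20, 5))  # 40 + 80 + 45 = 165
```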

"Do it for the gram." - me

Scale

- We probably need to divide by cals/gram.

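| | protein_value | fat_value | carbohydrate_value |
|---|---|---|---|
| 0 | 3.47 | 8.37 | 4.07 |
| 1 | 4.27 | 8.79 | 3.87 |
| 2 | 2.44 | 8.37 | 3.57 |
| 3 | 2.44 | 8.37 | 3.57 |
| 4 | 2.44 | 8.37 | 3.57 |
| ... | ... | ... | ... |
| 307 | 2.44 | 8.37 | 3.57 |
| 308 | 2.44 | 8.37 | 3.57 |
| 309 | 2.44 | 8.37 | 3.57 |
| 310 | 2.44 | 8.37 | 3.57 |
| 311 | 2.44 | 8.37 | 3.57 |

[312 rows x 3 columns]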

Scale

- We get...

| | protein_value | fat_value | carbohydrate_value |
|---|---|---|---|
| 0 | 0.8675 | 0.930000 | 1.0175 |
| 1 | 1.0675 | 0.976667 | 0.9675 |
| 2 | 0.6100 | 0.930000 | 0.8925 |
| 3 | 0.6100 | 0.930000 | 0.8925 |
| 4 | 0.6100 | 0.930000 | 0.8925 |

- Numbers much closer together.

df['fat_value'] = df['fat_value'] / 9

df['carbohydrate_value'] = df['carbohydrate_value'] / 4

df['protein_value'] = df['protein_value'] / 4

print(df[:5])
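The same scaling can be sketched without overwriting the columns. This is one possible variant, not the notebook's code: the three rows are copied from the table shown earlier, and `cals_per_gram` and `scaled` are names introduced here for illustration.

```python
import pandas as pd

# First three rows of the table shown earlier in the slides.
df = pd.DataFrame(
    {
        "protein_value": [3.47, 4.27, 2.44],
        "fat_value": [8.37, 8.79, 8.37],
        "carbohydrate_value": [4.07, 3.87, 3.57],
    }
)

# Nominal calories per gram for each column (4, 9, 4 from the slides).
cals_per_gram = pd.Series(
    {"protein_value": 4, "fat_value": 9, "carbohydrate_value": 4}
)

# DataFrame.div aligns the Series index with the column names and
# returns a new frame, so df itself is left untouched.
scaled = df.div(cals_per_gram, axis="columns")

print(scaled)
```

Because `scaled` is built from the untouched `df`, rerunning the cell gives the same numbers, whereas an in-place `df[col] = df[col] / 4` divides again on every run.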

ML

- Whirlwind tour, some review, some novel

- ✓ Scale: Macronutrients

- Linear Regression: Wealth vs Life Expectancy

- Polynomial Regression: Wealth vs Life Expectancy

Linear Regression

- People with more money might live longer, I don't know.

- Sure seems like it'd help.

- Is this true of countries?

Linear Regression

- The column names didn't preserve this, but `2022_x` is GDP per capita and `2022_y` is life expectancy.

| | 2022_x | 2022_y |
|---|---|---|
| 0 | 33300.838819 | 74.992000 |
| 1 | 1642.432039 | 62.899031 |
| 2 | 352.603733 | 62.879000 |
| 3 | 1788.875347 | 57.626176 |
| 4 | 2933.484644 | 61.929000 |
| ... | ... | ... |
| 260 | 3745.560367 | 72.598000 |
| 261 | 5290.977397 | 79.524000 |
| 263 | 6766.481254 | 61.480000 |
| 264 | 1456.901570 | 61.803000 |
| 265 | 1676.821489 | 59.391000 |

- Let's take a linear regression.

Linear Regression

# prompt: sklearn linear regression over dataframe "both"

from sklearn.linear_model import LinearRegression

# Prepare the data for linear regression

X = both[['2022_x']] # GDP per capita in 2022

y = both['2022_y'] # Life expectancy in 2022

# Create and train the linear regression model

model = LinearRegression()

model.fit(X, y)

# Print the model coefficients

print("Coefficients:", model.coef_)

print("Intercept:", model.intercept_)

Linear Regression

- We get...

Coefficients: [0.00019047]

Intercept: 68.69969966270624

- Let's plot it.

- Oh that is high key not linear.
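One way to see the misfit without a plot is to plug a few rows back into the fitted line. This sketch uses the coefficient and intercept printed above and three rows copied from the table; `predict_linear` is a helper name invented here.

```python
# Coefficient and intercept as printed on the slide.
SLOPE = 0.00019047
INTERCEPT = 68.69969966270624


def predict_linear(gdp_per_capita):
    """Life expectancy predicted by the fitted line."""
    return INTERCEPT + SLOPE * gdp_per_capita


# (GDP per capita, actual life expectancy) pairs copied from the table above.
samples = [(352.603733, 62.879), (2933.484644, 61.929), (33300.838819, 74.992)]

for gdp, actual in samples:
    predicted = predict_linear(gdp)
    print(f"gdp={gdp:>12.2f}  predicted={predicted:.2f}  actual={actual:.2f}")
```

For the two low-GDP rows the line predicts close to its intercept, several years above the actual values, because the slope is too small to matter at that scale.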

ML

- Whirlwind tour, some review, some novel

- ✓ Scale: Macronutrients

- ✓ Linear Regression: Wealth vs Life Expectancy

- Polynomial Regression: Wealth vs Life Expectancy

Polynomial Regression

- That looked like a little money helped a lot, and a lot more money helped very little.

- Lines don't do that, they just 📈

- Let's try a parabola - a polynomial of degree 2.

- Well actually a parabola will go up then down, so I did degree 3 whoops.

# prompt: do polynomial regression of degree 3 over both

# Create polynomial features up to degree 3

from sklearn.preprocessing import PolynomialFeatures

poly = PolynomialFeatures(degree=3)

X_poly = poly.fit_transform(X)

# Create and train the polynomial regression model

model_poly = LinearRegression()

model_poly.fit(X_poly, y)

# Print the model coefficients

print("Polynomial Coefficients:", model_poly.coef_)

print("Polynomial Intercept:", model_poly.intercept_)

- Let's see it.

Polynomial Regression

- We get...

Polynomial Coefficients: [ 0.00000000e+00 7.16975595e-04 -8.57111644e-09 2.87583542e-14]

Polynomial Intercept: 65.12557554366506

- Let's plot it.

- Oh that is high key not polynomial either lol.
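To get a feel for the cubic's shape without a plot, here is a small sketch that evaluates the printed coefficients by hand. `predict_cubic` and the GDP values are illustrative choices, not part of the notebook.

```python
# Coefficients and intercept as printed on the slide. The leading 0 is the
# coefficient on the constant column that PolynomialFeatures adds.
COEFS = [0.00000000e+00, 7.16975595e-04, -8.57111644e-09, 2.87583542e-14]
INTERCEPT = 65.12557554366506


def predict_cubic(gdp_per_capita):
    """Evaluate intercept + sum(coef_k * gdp**k) for k = 0..3."""
    return INTERCEPT + sum(c * gdp_per_capita**k for k, c in enumerate(COEFS))


# Hypothetical GDP-per-capita values chosen only to show the curve's shape.
for gdp in (1_000, 50_000, 100_000, 200_000):
    print(f"gdp={gdp:>7}  predicted={predict_cubic(gdp):.2f}")
```

With these coefficients the prediction falls between 50,000 and 100,000 and then rises again by 200,000, which is not the "helps a lot, then levels off" shape we were after.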

ML

- Whirlwind tour, some review, some novel

- ✓ Scale: Macronutrients

- ✓ Linear Regression: Wealth vs Life Expectancy

- ✓ Polynomial Regression: Wealth vs Life Expectancy

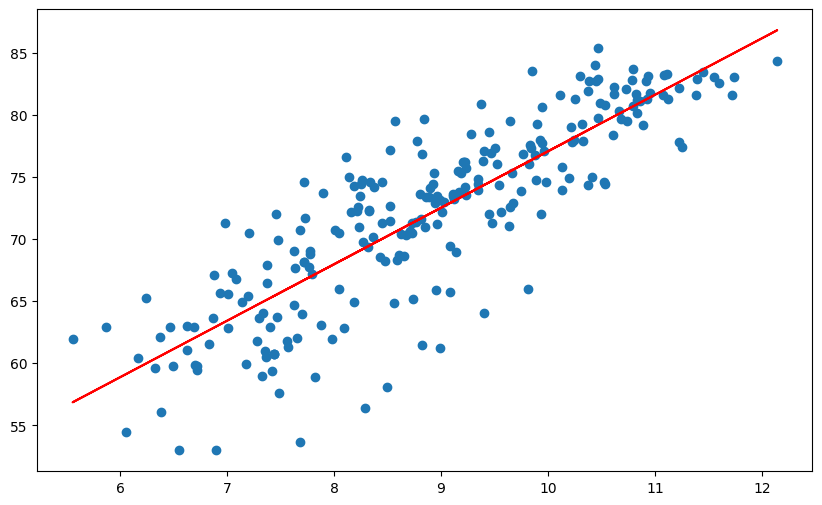

Scale

- Suppose a little bit of something (money) helps a lot.

- But a lot more doesn't help much more.

- Think: Going from 99 to 100 thousand dollars isn't as impactful as going from 0 to 1 thousand.

- Usually that's a logarithm.
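A rough sketch of the idea: take the log of GDP first, then fit an ordinary straight line to the transformed values. This is not the notebook's code. It uses only the ten rows printed in the table earlier, assumes the natural log (the slides do not say which base), and swaps sklearn for the closed-form least-squares formula, so its numbers will not match a fit on the full dataset.

```python
import math

# (GDP per capita, life expectancy) rows copied from the table shown earlier.
rows = [
    (33300.838819, 74.992000),
    (1642.432039, 62.899031),
    (352.603733, 62.879000),
    (1788.875347, 57.626176),
    (2933.484644, 61.929000),
    (3745.560367, 72.598000),
    (5290.977397, 79.524000),
    (6766.481254, 61.480000),
    (1456.901570, 61.803000),
    (1676.821489, 59.391000),
]

# Assumption: natural log. The slides do not say which base was used.
log_gdp = [math.log(gdp) for gdp, _ in rows]
life = [years for _, years in rows]

# Closed-form simple least squares: slope = cov(x, y) / var(x).
mean_x = sum(log_gdp) / len(log_gdp)
mean_y = sum(life) / len(life)
slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(log_gdp, life)) / sum(
    (x - mean_x) ** 2 for x in log_gdp
)
intercept = mean_y - slope * mean_x

print("Coefficient:", slope)
print("Intercept:", intercept)
```

In a notebook that already has `X` and `y`, the equivalent step would presumably be to log-transform `X` and reuse `LinearRegression` unchanged.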

Linear Regression

- Regress life expectancy on the log of GDP instead, and we get...

Coefficients: [4.54777158]

Intercept: 31.58450749077179

- Let's plot it.

- Yeah that's a log.
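Reading the log fit: under a model of the form intercept + coefficient × log(GDP), every doubling of GDP adds the same number of years. The sketch below works that out from the printed numbers, assuming the natural log was used (an assumption, since the slides do not state the base); `predict_log` and the GDP values are illustrative.

```python
import math

# Coefficient and intercept as printed on the slide.
SLOPE = 4.54777158
INTERCEPT = 31.58450749077179


def predict_log(gdp_per_capita):
    """Life expectancy predicted from log GDP (natural log assumed)."""
    return INTERCEPT + SLOPE * math.log(gdp_per_capita)


# Under a log model, each doubling of GDP adds the same number of years.
years_per_doubling = SLOPE * math.log(2)
print(f"years gained per doubling: {years_per_doubling:.2f}")

# Hypothetical GDP-per-capita values, each double the previous one.
for gdp in (1_000, 2_000, 4_000, 8_000):
    print(f"gdp={gdp:>5}  predicted={predict_log(gdp):.2f}")
```

The gain per doubling is constant, so the gain per extra dollar shrinks as GDP grows, which matches the "a little helps a lot" intuition.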

Colab Link

- Link

- If we have time I'll demo the wrangling.