(Un)supervision

AI 101

Approaches

Different Approaches to AI

If we want a computer to behave like a human, we need to somehow model our way of thinking inside a computer. Consequently, we need to try to understand what makes a human being intelligent.

To be able to program intelligence into a machine, we need to understand how our own decision-making processes work. If you do a little introspection, you will realize that some processes happen subconsciously (e.g. we can distinguish a cat from a dog without thinking about it), while others involve reasoning.

Different Approaches to AI

| Top-down Approach (Symbolic Reasoning) | Bottom-up Approach (Neural Networks) |
|---|---|
| A top-down approach models the way a person reasons to solve a problem. It involves extracting knowledge from a human being, and representing it in a computer-readable form. We also need to develop a way to model reasoning inside a computer. | A bottom-up approach models the structure of a human brain, consisting of a huge number of simple units called neurons. We can train a network of neurons to solve useful problems by providing training data. |

Different Approaches to AI

There are also some other possible approaches to intelligence:

- An emergent, synergetic, or multi-agent approach is based on the fact that complex intelligent behaviour can be obtained from the interaction of a large number of simple agents. According to evolutionary cybernetics, intelligence can emerge from simpler, reactive behaviour in the process of metasystem transition.

- An evolutionary approach, or genetic algorithm, is an optimization process based on the principles of evolution.
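To make the evolutionary idea concrete, here is a minimal sketch of a genetic algorithm. Everything in it is an assumption chosen for illustration: the function name `evolve`, the bit-string encoding, the population size, and the toy "OneMax" fitness (count the 1 bits). It is one simple variant among many, not a reference implementation.

```python
import random

def evolve(fitness, length=12, population_size=20, generations=40, seed=0):
    """Evolve bit strings toward higher `fitness` via selection, crossover and mutation."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(population_size)]
    for _ in range(generations):
        # Selection: keep the fitter half unchanged (so the best individual survives).
        population.sort(key=fitness, reverse=True)
        parents = population[: population_size // 2]
        children = []
        while len(parents) + len(children) < population_size:
            mother, father = rng.sample(parents, 2)
            cut = rng.randrange(1, length)        # one-point crossover
            child = mother[:cut] + father[cut:]
            flip = rng.randrange(length)          # mutation: flip one random bit
            child[flip] = 1 - child[flip]
            children.append(child)
        population = parents + children
    return max(population, key=fitness)

# Toy problem ("OneMax"): fitness is simply the number of 1 bits.
best = evolve(fitness=sum)
print(best, sum(best))
```

Because the fitter half is carried over unchanged each generation, the best fitness can never decrease; real applications swap in a problem-specific encoding and fitness function.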

Aside: CSAIL

The Top-Down Approach

- In a top-down approach, we try to model our reasoning.

- Because we can follow our thoughts when we reason, we can try to formalize this process and program it inside the computer.

- This is called symbolic reasoning; one example is grade-school algebra.

The Top-Down Approach

- People tend to have some rules in their head that guide their decision making processes.

- At least according to them (go observe rush hour drivers for example)

- For example, when a doctor is diagnosing a patient, they may realize that a person has a fever, and thus there might be some inflammation going on inside the body.

- By applying a large set of rules to a specific problem a doctor may be able to come up with the final diagnosis.
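As a rough sketch of how "applying a large set of rules" might look in code, the snippet below does forward chaining over if-then rules. The rules and fact names are made up for illustration (they are not medical knowledge), and `forward_chain` is a hypothetical helper name.

```python
# Hypothetical toy rules: (set of premises, conclusion). Illustration only.
RULES = [
    ({"fever"}, "possible inflammation"),
    ({"possible inflammation", "cough"}, "possible respiratory infection"),
]

def forward_chain(facts, rules):
    """Apply rules repeatedly until no new conclusion can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

derived = forward_chain({"fever", "cough"}, RULES)
print(sorted(derived))
```

Note how the second rule only fires after the first one has added its conclusion: chaining rules is what lets a small rule set reach a multi-step diagnosis.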

The Top-Down Approach

- This approach relies heavily on knowledge representation and reasoning.

- Extracting knowledge from a human expert might be the most difficult part, because a doctor in many cases would not know exactly why they are coming up with a particular diagnosis.

- Sometimes the solution just comes up in their head without explicit thinking.

- Some tasks, such as determining the age of a person from a photograph, cannot be reduced to manipulating knowledge.

Bottom-Up Approach

- Alternatively, we can try to model the simplest atomic element inside our brain: a neuron.

- Obviously there are simpler elements (e.g. chemicals), but they take on a different meaning when not considered in isolation.

- Therefore they are not atomic, or indivisible, units of “brain”.

Bottom-Up Approach

- We can construct a so-called artificial neural network inside a computer, and then try to teach it to solve problems by giving it examples.

- Allegedly this process is similar to how a newborn child learns about their surroundings by making observations.

- Simply program a computer to pretend to be however we think brains work.

- Of note, in some sense it doesn’t matter if we model the brain correctly or not.

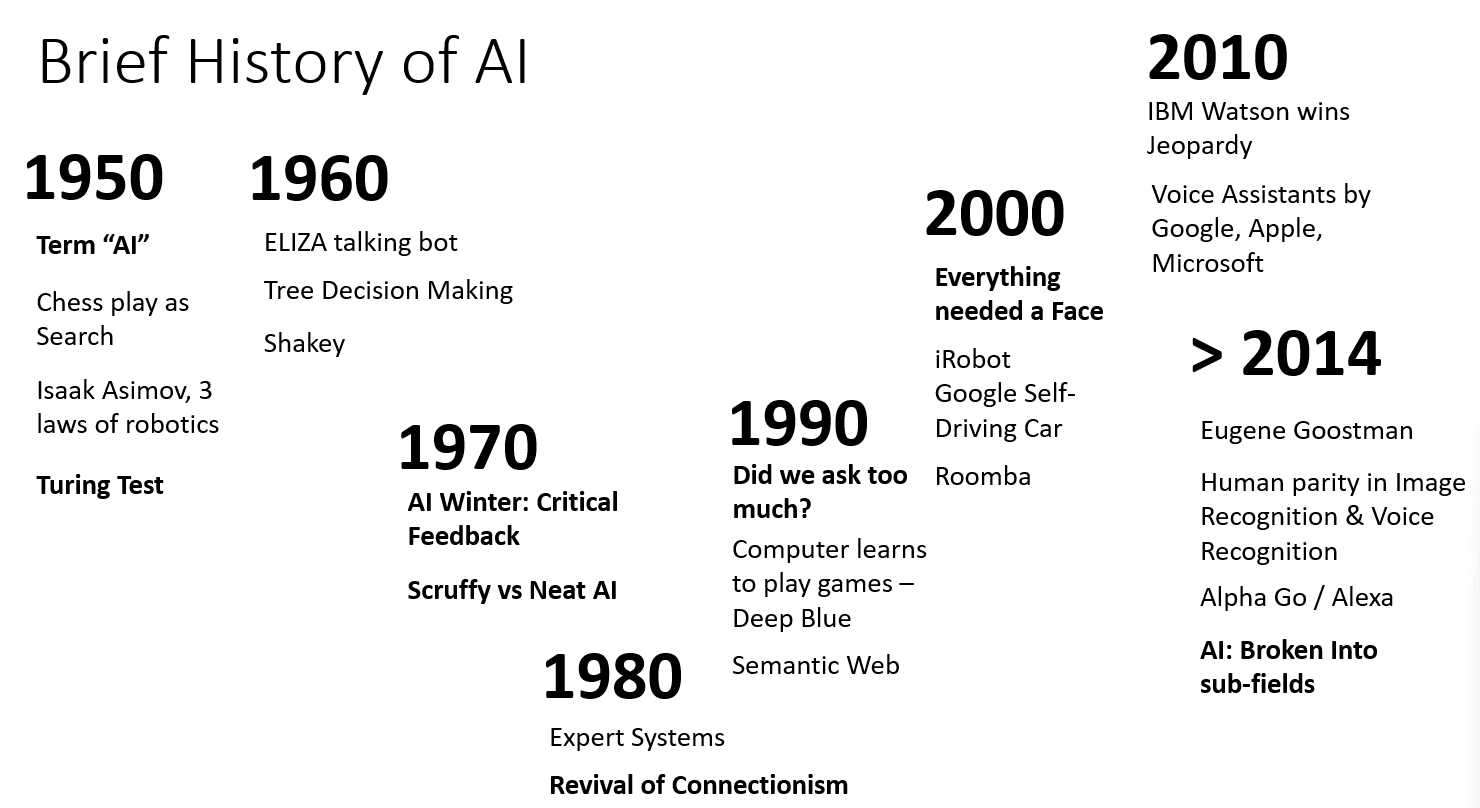
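To illustrate "teaching by examples", here is a minimal sketch of a single artificial neuron (a perceptron) learning the logical AND function. This is a deliberately crude stand-in for a neural network; the function names, the learning rate, and the choice of AND as the task are all assumptions made for this example.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs exceeds zero, else stay silent (0)."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0

def train_perceptron(examples, epochs=20, learning_rate=1.0):
    """Learn weights and a bias from (inputs, target) pairs with the perceptron rule."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            # Nudge the parameters toward the right answer; nothing changes when error == 0.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Training examples for logical AND: ((input_1, input_2), expected_output).
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_EXAMPLES)
print([predict(weights, bias, x) for x, _ in AND_EXAMPLES])  # [0, 0, 0, 1]
```

Nobody wrote an "AND rule" here; the behaviour comes entirely from the examples, whether or not this resembles what a biological neuron does.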

A Brief History of AI

- Artificial Intelligence was started as a field in the middle of the twentieth century.

- Initially, symbolic reasoning was the prevalent approach, and it led to a number of important successes, such as expert systems – computer programs that were able to act as an expert in some limited problem domains.

- However, it soon became clear that such an approach does not scale well.

Problems arise

- Extracting the knowledge from an expert, representing it in a computer, and keeping that knowledge base accurate turns out to be a very complex task, and too expensive to be practical in many cases.

- This led to the so-called AI Winter in the 1970s.

Image by Dmitry Soshnikov

Computers Emerge

- As time passed…

- Computing resources became cheaper, and

- More data became available…

- So neural network approaches started demonstrating great performance, competing with human beings in many areas such as computer vision and speech understanding.

In the last decade, the term Artificial Intelligence has been mostly used as a synonym for Neural Networks, because most of the AI successes that we hear about are based on them.

Chess

We can observe how the approaches changed, for example, in creating a chess playing computer program:

- Early chess programs were based on search: the program explicitly enumerated the opponent's possible replies a fixed number of moves ahead, and selected the move leading to the best position reachable within those moves. This led to the development of the so-called alpha-beta pruning search algorithm.
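A minimal sketch of alpha-beta pruning, for the curious. To stay short it searches a tiny hand-written game tree rather than chess: a node is either a number (the evaluation of a final position) or a list of child nodes. The tree and the function name `alphabeta` are assumptions for this example.

```python
import math

def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf):
    """Return the minimax value of `node`, skipping branches that cannot change the result."""
    if not isinstance(node, list):
        return node  # leaf: static evaluation of the position
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta))
            alpha = max(alpha, best)
            if alpha >= beta:
                break  # the opponent already has a better option elsewhere
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta))
        beta = min(beta, best)
        if alpha >= beta:
            break  # we already have a better option elsewhere
    return best

# Our move picks one of three branches; the opponent then picks the worst leaf for us.
tree = [[3, 5], [2, 9], [0, 1]]
print(alphabeta(tree, maximizing=True))  # 3
```

In this tree the leaves 9 and 1 are never evaluated: once a branch is known to be worse than one already found, the rest of it is skipped. That saving is what made deeper search affordable.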

Chess

- Search strategies work well toward the end of the game, where the search space is limited by a small number of possible moves. However, at the beginning of the game, the search space is huge, and the algorithm can be improved by learning from existing matches between human players.

- Subsequent experiments employed so-called case-based reasoning, where the program looked for cases in the knowledge base very similar to the current position in the game.

Chess

- Modern programs that win over human players are based on neural networks and reinforcement learning, where the programs learn to play solely by playing against themselves for a long time and learning from their own mistakes – much like human beings do when learning to play chess.

- However, a computer program can play many more games in much less time, and thus can learn much faster.

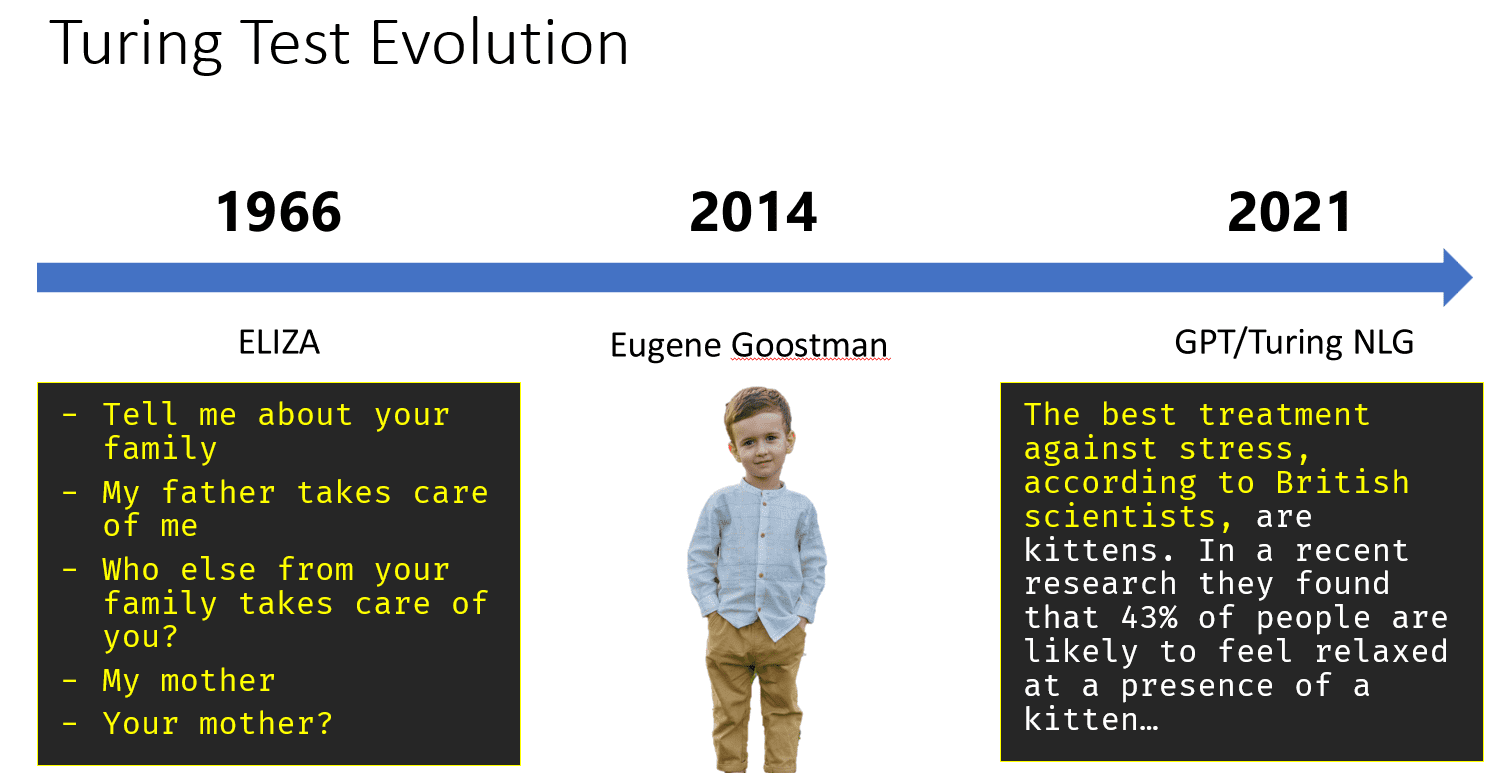

Chatbots

Similarly, we can see how the approach towards creating “talking programs” (that might pass the Turing test) changed:

- Early programs of this kind, such as Eliza, were based on very simple grammatical rules and the reformulation of the input sentence into a question.
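The "reformulate the input into a question" trick can be sketched in a few lines. The patterns below are invented for illustration and are not taken from the original Eliza script, which was considerably richer; `respond` is a hypothetical name.

```python
import re

# Hypothetical patterns: (what the user said, how to turn it into a question).
PATTERNS = [
    (re.compile(r"^i am (.+)$", re.IGNORECASE), "Why are you {}?"),
    (re.compile(r"^i feel (.+)$", re.IGNORECASE), "What makes you feel {}?"),
]

def respond(sentence):
    """Turn a statement into a question using the first matching pattern."""
    text = sentence.strip().rstrip(".!")
    for pattern, template in PATTERNS:
        match = pattern.match(text)
        if match:
            return template.format(match.group(1))
    return "Please tell me more."  # fallback when no rule applies

print(respond("I am tired."))   # Why are you tired?
print(respond("Nice weather"))  # Please tell me more.
```

There is no understanding anywhere in this program, only string matching, which is roughly why such systems fall apart after a few exchanges.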

Chatbots

- Modern assistants, such as Cortana, Siri or Google Assistant are all hybrid systems that use neural networks to convert speech into text and recognize our intent, and then employ some reasoning or explicit algorithms to perform required actions.

Chatbots

Similarly, we can see how the approach towards creating “talking programs” (that might pass the Turing test) changed:

- Depending on who you ask, this is where we are now.

- If you ask me, “no”.

- My job is to know how to answer this question.

- If you ask Sam Altman, “yes”.

- Sam Altman’s job is to convincingly say “yes” when asked this question.

- If you ask me, “no”.

Image by Dmitry Soshnikov, photo by Marina Abrosimova, Unsplash

Boom

Recent AI Research

- The huge recent growth in neural network research started around 2010, when two things happened.

- More compute.

- More data.

More compute

- A graduate student at UToronto affiliated with Prof. Hinton decided to use a GPU (graphics processing unit) instead of a CPU (central processing unit) for image classification.

UToronto

- This is the first documented use, of which I am aware, of a graphics-intended compute device for general-purpose (or perhaps general AI) computing.

- This is not super well-documented and only something I became aware of “through the grapevine”.

- As an architecture researcher (e.g. I study GPU vs. CPU) I tend to think it’s a big deal.

- Read more here perhaps?

More data

- Genius 李飞飞 (Fei-Fei Li, my 🐐) said (paraphrasing) “why don’t we just label as many images as possible and see what happens”.

- The result was ImageNet, a huge collection of around 14 million annotated images, which led to the ImageNet Large Scale Visual Recognition Challenge.

The world changed quickly

Going forward

- Since then, neural networks have demonstrated very successful behaviour in many tasks:

| Year | Human Parity (nominally) achieved |
|---|---|
| 2015 | Image Classification |
| 2016 | Conversational Speech Recognition |
| 2018 | Automatic Machine Translation (Chinese-to-English) |
| 2020 | Image Captioning |

Supervision

Motivating Questions

- What is the difference between supervised, unsupervised, and reinforcement learning?

- What are supervised, unsupervised, and reinforcement learning used for?

- What are some challenges with supervised and unsupervised learning?

Supervised Learning

- Uses labeled data.

- Maps inputs to outputs.

- Goal: Predict outcomes.

- Examples: Classification, regression.
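A minimal sketch of supervised classification, assuming a made-up dataset of (height in cm, weight in kg) measurements labeled "cat" or "dog". It uses the 1-nearest-neighbor rule, one of the simplest ways to map inputs to outputs; `nearest_neighbor` is a name chosen for this example.

```python
def nearest_neighbor(train, query):
    """Predict the label of `query` as the label of the closest training point."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    _, label = min(train, key=lambda pair: squared_distance(pair[0], query))
    return label

# Hypothetical labeled data: (features, label). The labels are the "supervision".
train = [((25, 4), "cat"), ((23, 5), "cat"), ((60, 30), "dog"), ((55, 25), "dog")]

print(nearest_neighbor(train, (58, 28)))  # dog
print(nearest_neighbor(train, (24, 4)))   # cat
```

The model is only as good as its labels: relabel the training rows and the predictions change accordingly.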

Unsupervised Learning

- Uses unlabeled data.

- Finds hidden patterns.

- Goal: Discover structure.

- Examples: Clustering, dimensionality reduction.
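For contrast, here is a minimal sketch of clustering with k-means on plain numbers. No labels are given; the algorithm only receives the points and two starting guesses for the cluster centers. The data, the starting centers, and the name `kmeans_1d` are assumptions for illustration.

```python
def kmeans_1d(points, centers, iterations=10):
    """Plain k-means on numbers: assign each point to its nearest center, then re-average."""
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers, clusters

points = [1.0, 1.5, 2.0, 9.0, 9.5, 10.0]
centers, clusters = kmeans_1d(points, centers=[0.0, 5.0])
print(centers)   # [1.5, 9.5]
print(clusters)  # [[1.0, 1.5, 2.0], [9.0, 9.5, 10.0]]
```

The two groups are discovered, not told. What the groups mean is left to a human to interpret.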

The Label Divide

- Supervised: Labeled data.

- Unsupervised: Unlabeled data.

- Label presence is key.

Uses of Unsupervised

- Clustering: Grouping similar data.

- E.g. discovering Yemenia, a novel coffee varietal

- Learn more

- 18 min + can’t watch on stream.

- Anomaly detection: Finding outliers.

- Dimensionality reduction: Simplifying data.

- Association rule learning: Finding relationships.

Clustering in Action

Anomaly Detection

- Detect fraudulent bank transactions.

- E.g. if I purchased an espresso in Salem, MA

- Detect anomalous (computer) network behavior.

- My colleague at MS does this

- Predict imminent equipment failure in e.g. manufacturing sector.
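A minimal sketch of anomaly detection, assuming a made-up list of purchase amounts and a simple z-score rule (flag anything more than two standard deviations from the mean). Real fraud systems are far more elaborate; the threshold and the name `find_outliers` are assumptions for this example.

```python
import statistics

def find_outliers(values, threshold=2.0):
    """Return the values lying more than `threshold` standard deviations from the mean."""
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)  # population standard deviation
    if stdev == 0:
        return []  # all values identical: nothing stands out
    return [v for v in values if abs(v - mean) / stdev > threshold]

# Hypothetical espresso purchases, plus one suspicious charge.
amounts = [4.5, 5.0, 4.8, 5.2, 4.9, 5.1, 95.0]
print(find_outliers(amounts))  # [95.0]
```

Note that the outlier itself inflates the mean and standard deviation, so the threshold is a judgment call. That ties directly into the evaluation problem: nobody hands you the "right" cutoff.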

Challenges

- Evaluation

- No clear “right” answer.

- Sometimes a right answer will exist someday, but you have to build the model now.

- Sometimes the “right” answer is determined by someone in power.

- Subjective evaluation metrics.

- Non-falsifiable by construction.

- Validating discovered patterns.

- No clear “right” answer.

Challenges

- Computational Cost

- Large datasets are common.

- Algorithms can be complex.

- Scalability is a concern.

- Computational costs become operating costs.

Takeaways

- Supervised: Labeled data ⇒ predict label.

- Unsupervised: Unlabeled data ⇒ discover patterns.

- Valuable, but challenging.

- Value correlates with difficulty, because hard-won results are scarce.

Reinforcement Learning

The Third Way

- Supervised Learning: Learning from labeled data.

- Unsupervised Learning: Discovering patterns in unlabeled data.

- Reinforcement Learning: Learning through interactions with an environment.

1. Supervised Learning

The model is trained on a labeled dataset, meaning each input has a corresponding output.

- Labeled Data: Training data has predefined labels.

- Types of Problems: Used for classification (e.g., spam detection) and regression (e.g., predicting house prices).

2. Unsupervised Learning

The model identifies patterns, clusters, or associations independently without predefined labels.

- Unlabeled Data: No predefined outputs.

- Types of Problems: Used for clustering (e.g., customer segmentation) and association (e.g., market basket analysis).

3. Reinforcement Learning (RL)

Involves an agent that interacts with an environment, learning through rewards and penalties to maximize long-term success.

- Interaction-Based Learning: The agent learns by taking actions and receiving feedback.

- No Labeled Data: Learns from trial and error.

- Just like us!
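A minimal sketch of trial-and-error learning, using tabular Q-learning on a made-up environment: a corridor of five cells where the agent starts at cell 0 and earns a reward of 1 for reaching cell 4. The environment, the hyperparameters, and the purely random exploration are all assumptions chosen to keep the example short.

```python
import random

N_STATES = 5        # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, +1)  # step left or step right

def step(state, action):
    """Hypothetical environment: move along the corridor, reward 1 on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward

def q_learning(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Estimate the long-term value q[(state, action)] from interaction alone."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            action = rng.choice(ACTIONS)  # explore by acting at random
            next_state, reward = step(state, action)
            best_next = max(q[(next_state, a)] for a in ACTIONS)
            # Move the estimate toward "reward now + discounted best value later".
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1], i.e. always step right
```

The agent is never told that "right" is correct. It only ever sees rewards, and the preference for stepping right emerges from the learned values.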

Comparison Table

| Criteria | Supervised Learning | Unsupervised Learning | Reinforcement Learning |
|---|---|---|---|
| Definition | Learns from labeled data | Identifies patterns in unlabeled data | Learns through interaction with environment |
| Type of Data | Labeled data | Unlabeled data | No predefined data; learn from environment |
| Type of Problems | Classification, Regression | Clustering, Association | Sequential decision-making |
| Supervision | Requires external supervision | No supervision | No supervision; learns from feedback |
| Goal | Predict outcomes accurately | Discover hidden patterns | Optimize actions for maximum rewards |
| Applications | Medical diagnosis, fraud detection | Customer segmentation, anomaly detection | Self-driving cars, robotics, gaming |

Supervised Learning

| Domain | Examples |
|---|---|
| Healthcare | Disease diagnosis (e.g., cancer detection) |
| Finance | Loan approval, credit risk assessment |
| NLP | Sentiment analysis, text classification |

Unsupervised Learning

| Domain | Examples |
|---|---|
| E-commerce | Product recommendation, customer segmentation |
| Cybersecurity | Fraud detection, intrusion detection |
| Biology | Gene classification, dimensionality reduction |

Reinforcement Learning

| Domain | Examples |
|---|---|
| Autonomous Driving | Self-driving cars learning optimal behavior |
| Robotics | Training robots for automated assembly tasks |
| Gaming | AI-driven strategy game play, e.g. AlphaGo |

Choosing the Right Approach

- Supervised Learning: Use when labeled data is available for prediction tasks.

- Unsupervised Learning: Use when exploring data structures without predefined labels.

- Reinforcement Learning: Use when decision-making is required in a dynamic environment.
